Real-Time Data Streaming: Kafka and Spring Boot Tutorial (2025)

Implementing real-time data streaming using Kafka and Spring Boot in 2025 involves setting up Kafka brokers, configuring Spring Boot applications to produce and consume data, and ensuring secure and efficient data transmission.

Ready to build a modern, real-time data pipeline? This tutorial provides a step-by-step guide on how to implement real-time data streaming with Kafka and Spring Boot in 2025, covering everything from setup to deployment.

Introduction to Real-Time Data Streaming with Kafka and Spring Boot

Real-time data streaming has become crucial for applications that require immediate insights and responsiveness. Kafka, a distributed event streaming platform, combined with Spring Boot, a powerful Java framework, offers a robust solution for building such systems. This tutorial will guide you through the process of setting up a Kafka-based real-time data streaming application using Spring Boot.

Why Kafka and Spring Boot?

Kafka excels at handling high-throughput, fault-tolerant data streams, making it ideal for real-time applications. Spring Boot simplifies the development process by providing auto-configuration and dependency management, allowing developers to focus on the core logic.

- Scalability: Kafka’s distributed architecture enables horizontal scaling to handle increasing data volumes.

- Reliability: Built-in fault tolerance ensures continuous operation even in the event of node failures.

- Ease of Development: Spring Boot’s auto-configuration simplifies the setup and integration with Kafka.

- Flexibility: Kafka supports various data formats and can be integrated with other data processing tools.

In this tutorial, we will cover the essential steps involved in setting up a Kafka environment, developing Spring Boot applications to produce and consume data, and configuring the necessary components for real-time data streaming. By the end of this tutorial, you will have a solid understanding of how to implement real-time data streaming with Kafka and Spring Boot effectively.

Setting Up Your Kafka Environment

Before diving into the code, you need to set up your Kafka environment. This involves downloading and configuring Kafka, starting ZooKeeper, and creating the necessary topics.

Downloading and Installing Kafka

First, download the latest version of Kafka from the Apache Kafka website. Extract the downloaded archive to a directory of your choice.

Next, configure the Kafka brokers by modifying the server.properties file located in the config directory. You can adjust parameters such as the broker ID, listeners, and log directories.

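Once Kafka is configured, start ZooKeeper before starting the Kafka broker. This step applies to ZooKeeper-based releases (Kafka 3.x and earlier); Kafka 4.0 and later run only in KRaft mode and do not include ZooKeeper. Use the following command: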

```bash
bin/zookeeper-server-start.sh config/zookeeper.properties
```

You can then start the Kafka broker with:

```bash
bin/kafka-server-start.sh config/server.properties
```

Setting up the Kafka environment is crucial for ensuring the seamless operation of your real-time data streaming application. By following these instructions, you’ll have a reliable foundation for building Kafka-based systems.
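Before moving on, it can help to confirm that the broker is accepting connections. The following is a minimal sketch, assuming the broker listens on the default `localhost:9092`; the class name `BrokerPortCheck` is illustrative. It only checks that the TCP port is open and does not speak the Kafka protocol.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class BrokerPortCheck {

    // Returns true if a TCP connection to host:port succeeds within the timeout.
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timeout, or unknown host: treat as "not listening".
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumption: the broker uses the default listener port 9092.
        System.out.println("Broker reachable: " + isListening("localhost", 9092, 2000));
    }
}
```

A `false` result usually means the broker has not finished starting or is bound to a different listener address than the one being checked.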

Creating a Spring Boot Producer Application

The producer application is responsible for sending data to Kafka topics. Using Spring Boot, you can quickly create a producer application that integrates with Kafka.

Adding Kafka Dependencies

Start by creating a new Spring Boot project and adding the Kafka dependency to your pom.xml file. When the project inherits from the Spring Boot parent POM, the version is managed for you:

    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
    </dependency>

Configuring the Kafka Producer

Configure the Kafka producer properties in your application.properties file. This includes specifying the Kafka broker address, serializer, and other settings:

    spring.kafka.bootstrap-servers=localhost:9092
    spring.kafka.producer.key-serializer=org.apache.kafka.common.serialization.StringSerializer
    spring.kafka.producer.value-serializer=org.apache.kafka.common.serialization.StringSerializer

With these configurations, your Spring Boot application is ready to produce messages to Kafka topics. Proper setup and configuration are essential for reliable data production.
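With the properties in place, sending a message comes down to injecting a `KafkaTemplate`. The sketch below is one possible shape, not the only one: the class names `KafkaTopicConfig` and `MessageProducer` are illustrative, the topic name `myTopic` matches the one used by the listener later in this tutorial, and the single partition and replica are assumptions suited to a local one-broker setup. It also assumes Spring for Apache Kafka 3.x, where `send` returns a `CompletableFuture`.

```java
import org.apache.kafka.clients.admin.NewTopic;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.kafka.config.TopicBuilder;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.stereotype.Service;

@Configuration
class KafkaTopicConfig {

    // Spring Boot's auto-configured KafkaAdmin creates this topic at startup if it is missing.
    @Bean
    public NewTopic myTopic() {
        return TopicBuilder.name("myTopic")
                .partitions(1)   // assumption: single partition for local development
                .replicas(1)     // assumption: single broker, so replication factor 1
                .build();
    }
}

@Service
class MessageProducer {

    private final KafkaTemplate<String, String> kafkaTemplate;

    // KafkaTemplate is auto-configured from the spring.kafka.producer.* properties.
    MessageProducer(KafkaTemplate<String, String> kafkaTemplate) {
        this.kafkaTemplate = kafkaTemplate;
    }

    // Sends the message asynchronously and logs the outcome when the broker responds.
    public void send(String message) {
        kafkaTemplate.send("myTopic", message).whenComplete((result, ex) -> {
            if (ex != null) {
                System.err.println("Send failed: " + ex.getMessage());
            } else {
                System.out.println("Sent to partition " + result.getRecordMetadata().partition()
                        + " at offset " + result.getRecordMetadata().offset());
            }
        });
    }
}
```

Because the send is asynchronous, the callback is where delivery failures surface; a caller that needs to block until the broker acknowledges the record could call `get()` on the returned future instead.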

Developing a Spring Boot Consumer Application

The consumer application is responsible for reading data from Kafka topics. Spring Boot provides a simple way to create a consumer application that listens to Kafka topics and processes the incoming data.

Implementing a Kafka Listener

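Create a Kafka listener class that consumes messages from a specified topic. Use the @KafkaListener annotation to define the topic and consumer group:

    import org.springframework.kafka.annotation.KafkaListener;
    import org.springframework.stereotype.Component;

    @Component
    public class MessageListener {

        // Called once for each record read from "myTopic" as a member of the "myGroup" consumer group.
        @KafkaListener(topics = "myTopic", groupId = "myGroup")
        public void listen(String message) {
            System.out.println("Received Message: " + message);
        }
    }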

Configuring the Kafka Consumer

Configure the Kafka consumer properties in your application.properties file. This includes specifying the Kafka broker address, deserializer, and group ID:

    spring.kafka.bootstrap-servers=localhost:9092
    spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer
    spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer
    spring.kafka.consumer.group-id=myGroup

The consumer application can now receive and process data from Kafka topics in real time. This is a crucial part of building a cohesive data streaming application.

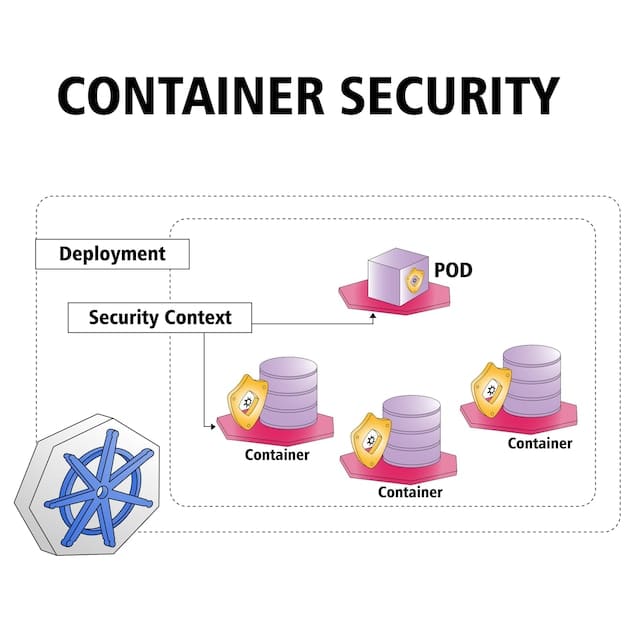

Securing Your Kafka and Spring Boot Applications

Security is paramount when dealing with real-time data streaming. Implementing security measures for both Kafka and Spring Boot applications is essential to protect sensitive data.

Enabling SSL/TLS Encryption

Enable SSL/TLS encryption for Kafka to secure data in transit. Configure the Kafka brokers and clients to use SSL certificates for authentication and encryption.

Setting up SSL requires creating a keystore and a truststore. The following command generates a key pair in a new keystore:

```bash
keytool -genkeypair -alias kafka -keyalg RSA -keystore kafka.jks -validity 365 -storepass secret -keypass secret
```

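The truststore referenced in the configuration is created separately, for example by importing the signing certificate with `keytool -importcert`. Then enable SSL in the `server.properties` file: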

```properties
listeners=SSL://localhost:9093
security.inter.broker.protocol=SSL
ssl.client.auth=required
ssl.keystore.location=/path/to/kafka.jks
ssl.keystore.password=secret
ssl.key.password=secret
ssl.truststore.location=/path/to/kafka.truststore.jks
ssl.truststore.password=secret
```
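Clients need matching settings before they can connect to the SSL listener. The sketch below builds the client-side properties using standard Kafka configuration keys; the class name `SslClientConfig` and the client keystore and truststore paths are hypothetical. A client keystore is included because the broker configuration above sets `ssl.client.auth=required`.

```java
import java.util.Properties;

public class SslClientConfig {

    // Builds client properties that mirror the broker's SSL listener configuration.
    static Properties sslClientProperties(String bootstrapServers,
                                          String truststorePath, String truststorePassword,
                                          String keystorePath, String keystorePassword) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        props.setProperty("security.protocol", "SSL");
        // The truststore lets the client verify the broker's certificate.
        props.setProperty("ssl.truststore.location", truststorePath);
        props.setProperty("ssl.truststore.password", truststorePassword);
        // The keystore identifies the client, needed when the broker requires client auth.
        props.setProperty("ssl.keystore.location", keystorePath);
        props.setProperty("ssl.keystore.password", keystorePassword);
        // Assumption: the private key uses the same password as the keystore.
        props.setProperty("ssl.key.password", keystorePassword);
        return props;
    }

    public static void main(String[] args) {
        // Hypothetical paths; replace with the locations of your own stores.
        Properties props = sslClientProperties("localhost:9093",
                "/path/to/client.truststore.jks", "secret",
                "/path/to/client.keystore.jks", "secret");
        System.out.println("security.protocol=" + props.getProperty("security.protocol"));
    }
}
```

In a Spring Boot application the same values would typically be set in application.properties through `spring.kafka.security.protocol` and the `spring.kafka.ssl.*` properties rather than built by hand.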

Implementing Authentication and Authorization

Implement authentication and authorization mechanisms for Spring Boot applications. Use Spring Security to protect your application endpoints and restrict access to authorized users.

- Authentication: Verify the identity of users through mechanisms like username/password, OAuth, or JWT.

- Authorization: Control access to resources based on user roles and permissions.

- Data Encryption: Encrypt sensitive data at rest and in transit to prevent unauthorized access.
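As an illustration of the authentication and authorization points above, here is a minimal Spring Security sketch. It assumes Spring Security 6 with `spring-boot-starter-security` on the classpath; the class name `SecurityConfig`, the `/api/messages/**` path, and the `ADMIN` role are hypothetical and would be replaced by your own endpoints and roles.

```java
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.security.config.Customizer;
import org.springframework.security.config.annotation.web.builders.HttpSecurity;
import org.springframework.security.config.annotation.web.configuration.EnableWebSecurity;
import org.springframework.security.web.SecurityFilterChain;

@Configuration
@EnableWebSecurity
class SecurityConfig {

    @Bean
    public SecurityFilterChain securityFilterChain(HttpSecurity http) throws Exception {
        http
            .authorizeHttpRequests(auth -> auth
                // Authorization: hypothetical publishing endpoint restricted to the ADMIN role.
                .requestMatchers("/api/messages/**").hasRole("ADMIN")
                // Every other request only needs an authenticated user.
                .anyRequest().authenticated())
            // Authentication: HTTP Basic keeps the sketch short; OAuth or JWT would replace this line.
            .httpBasic(Customizer.withDefaults());
        return http.build();
    }
}
```

This protects the application's HTTP endpoints only; access to the Kafka brokers themselves is still governed by the broker-side SSL settings.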

Securing your Kafka and Spring Boot applications is crucial for maintaining the integrity and confidentiality of your data. By implementing these security measures, you can protect your users' data against potential threats and support compliance with industry standards.

Monitoring and Maintaining Your Data Streaming Pipeline

Once your real-time data streaming pipeline is up and running, monitoring and maintenance become crucial for ensuring its stability and performance. Regular monitoring helps identify potential issues and proactively address them before they impact the system.

Using Kafka Monitoring Tools

Utilize Kafka monitoring tools like Kafka Manager, Burrow, or Prometheus to monitor the health and performance of your Kafka brokers, topics, and consumers. These tools provide valuable insights into metrics such as message throughput, latency, and consumer lag.

Implementing Logging and Alerting

Implement comprehensive logging and alerting mechanisms in both Kafka and Spring Boot applications. Log important events, errors, and performance metrics to help diagnose issues and track system behavior. Configure alerts to notify administrators of critical events, such as broker failures or high consumer lag.
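Consumer lag, mentioned above as a key metric and alert trigger, is the difference between the latest offset written to a partition and the offset the consumer group has committed. The sketch below shows the arithmetic on plain maps; the class name `ConsumerLag`, the sample offsets, and the threshold of 50 are illustrative, and treating a partition with no committed offset as offset 0 is an assumption. In practice the offsets would come from a monitoring tool or the Kafka admin API.

```java
import java.util.Map;
import java.util.TreeMap;

public class ConsumerLag {

    // Lag per partition = log end offset minus committed offset, never below zero.
    static Map<Integer, Long> lagPerPartition(Map<Integer, Long> endOffsets,
                                              Map<Integer, Long> committedOffsets) {
        Map<Integer, Long> lag = new TreeMap<>();
        for (Map.Entry<Integer, Long> entry : endOffsets.entrySet()) {
            // Assumption: a partition without a committed offset counts from 0.
            long committed = committedOffsets.getOrDefault(entry.getKey(), 0L);
            lag.put(entry.getKey(), Math.max(0L, entry.getValue() - committed));
        }
        return lag;
    }

    // Sums the lag across all partitions of the topic.
    static long totalLag(Map<Integer, Long> lag) {
        return lag.values().stream().mapToLong(Long::longValue).sum();
    }

    // An alert fires when the total lag is strictly above the threshold.
    static boolean isLagHigh(long totalLag, long threshold) {
        return totalLag > threshold;
    }

    public static void main(String[] args) {
        Map<Integer, Long> endOffsets = Map.of(0, 120L, 1, 95L);
        Map<Integer, Long> committed = Map.of(0, 100L, 1, 95L);
        Map<Integer, Long> lag = lagPerPartition(endOffsets, committed);
        long total = totalLag(lag);
        System.out.println(lag + " total=" + total + " alert=" + isLagHigh(total, 50));
        // prints: {0=20, 1=0} total=20 alert=false
    }
}
```

A steadily growing total usually indicates that consumers cannot keep up with producers, which is why lag is a common choice for alert thresholds.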

Regular maintenance tasks include:

- Regular Backups: Back up Kafka data regularly to prevent data loss in case of failures.

- Version Updates: Keep your Kafka brokers up to date with current releases.

- Node Management: Add, remove, and maintain broker nodes in the cluster.

Effective monitoring and maintenance practices are essential for ensuring the long-term reliability and performance of your real-time data streaming pipeline. Addressing issues quickly helps keep your application healthy.

| Key Point | Brief Description |
|---|---|
| 🚀 Set Up Kafka Environment | Download, configure, and start Kafka brokers/ZooKeeper. |
| ✍️ Spring Boot Producer | Create an application that sends data to Kafka topics. |
| 👂 Spring Boot Consumer | Build an app that reads data from Kafka topics. |
| 🛡️ Security Measures | Enable SSL/TLS and implement authentication. |

Real-Time Data Streaming with Kafka and Spring Boot: A Step-by-Step Tutorial

Kafka is a distributed event streaming platform capable of handling high-throughput, fault-tolerant data streams. It’s ideal for real-time data streaming because it ensures data is processed and available instantly, allowing for immediate insights and actions.

Spring Boot simplifies Kafka integration by providing auto-configuration and dependency management. This reduces boilerplate code, letting developers focus on business logic. It offers a structured way to manage Kafka producers and consumers efficiently.

Implement SSL/TLS encryption for data in transit, along with authentication and authorization mechanisms. Use Spring Security to manage user access and encrypt all sensitive data. Regularly update security protocols to protect against vulnerabilities.

Tools like Kafka Manager, Burrow, and Prometheus can monitor Kafka health and performance. They provide metrics like message throughput, latency, and consumer lag. Use logging and alerting to track system behavior and notify administrators of critical events.

Ensure reliability through regular backups, version updates, and effective node management. Kafka’s built-in fault tolerance ensures continuous operation even in the event of node failures. Proactive monitoring and maintenance are crucial for stability.

Conclusion

Implementing real-time data streaming with Kafka and Spring Boot in 2025 offers a powerful combination for building scalable and reliable applications. By following this tutorial, you can set up your environment, develop producer and consumer applications, secure your pipeline, and ensure its ongoing stability through monitoring and maintenance. Embrace these technologies to unlock the potential of real-time data in your projects.