AI Ethics in Practice: 5 Steps for U.S. Companies by Q2 2025

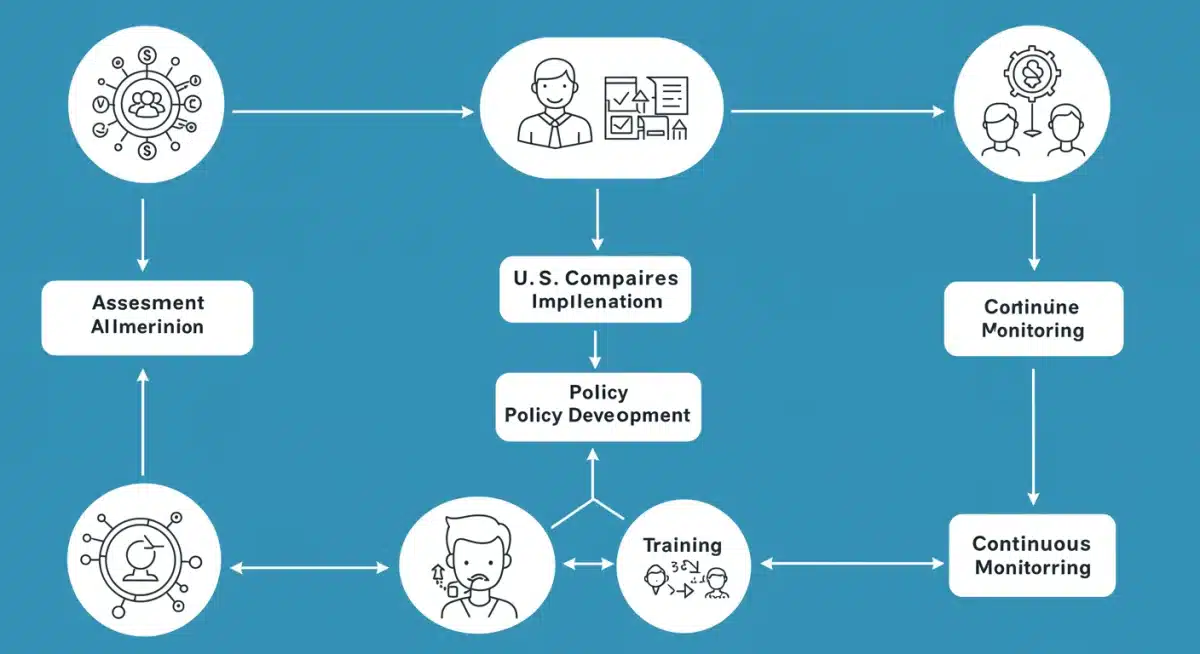

U.S. companies must implement five practical steps by Q2 2025 to develop AI responsibly, support ethical digital growth, and reduce risk as the technology and its regulation continue to evolve.

Putting AI ethics into practice is quickly becoming a non-negotiable imperative for businesses across the United States. As AI integration accelerates, the ethical implications of these technologies demand immediate, actionable strategies. This report details five steps U.S. companies can implement by Q2 2025 to build trust, ensure fairness, and foster sustainable innovation in their digital development.

The Urgency of Ethical AI in the U.S. Business Landscape

The rapid proliferation of artificial intelligence across industries has brought unprecedented opportunities, but also significant ethical challenges. U.S. companies are now facing increased scrutiny from regulators, consumers, and employees regarding the responsible development and deployment of AI systems. Addressing these concerns is not merely a compliance issue but a fundamental component of long-term business sustainability and reputation.

Recent incidents involving algorithmic bias, data privacy breaches, and opaque decision-making have highlighted the tangible risks associated with unchecked AI development. These events underscore the immediate need for robust ethical frameworks that guide innovation. Companies that proactively integrate ethical considerations into their AI strategies will be better positioned to navigate future regulatory landscapes and maintain public trust.

Step 1: Establish a Dedicated AI Ethics Committee and Governance Framework

By Q2 2025, every U.S. company leveraging AI should have a clearly defined AI ethics committee and a comprehensive governance framework in place. This committee should comprise diverse stakeholders, including technical experts, ethicists, legal counsel, and representatives from affected user groups. Its mandate must extend beyond mere advisory roles to include active oversight and policy enforcement.

The governance framework should outline clear lines of responsibility, decision-making processes, and reporting mechanisms for all AI-related projects. This includes establishing ethical principles tailored to the company’s specific operations and the types of AI systems being developed. Without a centralized body and a structured approach, ethical considerations risk being fragmented or overlooked amidst rapid development cycles.

Defining roles and responsibilities

- Appoint a Chief AI Ethics Officer or equivalent to lead the committee.

- Establish cross-functional teams to integrate ethical reviews into the AI development lifecycle.

- Mandate regular reporting on AI ethics initiatives to senior leadership and the board.

Implementing this foundational step ensures that ethical considerations are embedded from the initial stages of AI conception through deployment and maintenance. It moves beyond ad-hoc discussions to a systematic, accountable approach to responsible AI. The committee will serve as the central hub for addressing new ethical dilemmas as they arise, ensuring consistent application of principles.

Step 2: Develop and Implement Transparent AI Policies and Guidelines

Transparency is paramount in building trust around AI systems. U.S. companies must develop and publicly communicate clear policies and guidelines regarding their AI development and usage by Q2 2025. These policies should address key areas such as data collection, algorithmic decision-making, bias detection and mitigation, and human oversight mechanisms.

These guidelines should be accessible and understandable to both internal teams and external stakeholders. They should specify how the company ensures fairness, accountability, and privacy in its AI applications. Furthermore, companies should commit to explaining how their AI systems make decisions, especially in contexts that significantly impact individuals, such as hiring, lending, or healthcare.

Key policy areas to address

- Data Governance: Outline principles for ethical data sourcing, privacy protection, and data anonymization.

- Algorithmic Fairness: Detail strategies for identifying and mitigating biases in AI models.

- Human Oversight: Define where and when human intervention is required in AI-driven processes.
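As one illustration of the Algorithmic Fairness item, a policy can name a concrete screening metric. The sketch below computes per-group selection rates and compares each to the highest-rate group, flagging ratios below 0.8. That threshold mirrors the "four-fifths rule" in the EEOC's Uniform Guidelines on Employee Selection Procedures. It is only one heuristic among many fairness measures, and the function names, group labels, and data here are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of favorable outcomes per group, from (group, selected) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        if outcome:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates: Dict[str, float]) -> Dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group received a favorable outcome; ratios are undefined")
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(decisions: Iterable[Tuple[str, bool]], threshold: float = 0.8) -> List[str]:
    """Groups whose impact ratio falls below the threshold (0.8 = four-fifths rule)."""
    ratios = impact_ratios(selection_rates(decisions))
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical screening outcomes: group A selects 6 of 10, group B selects 3 of 10.
decisions = [("A", True)] * 6 + [("A", False)] * 4 + [("B", True)] * 3 + [("B", False)] * 7
print(selection_rates(decisions))  # {'A': 0.6, 'B': 0.3}
print(flag_groups(decisions))      # ['B']
```

A flagged group is a prompt for human review under the company's policy, not an automatic finding of discrimination.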

By establishing these transparent policies, companies not only align with emerging best practices but also proactively manage expectations and build confidence among their user base. This approach to transparency can differentiate a company in a market that is increasingly sensitive to ethical concerns.

Step 3: Integrate Ethical AI Training and Education Across the Organization

A governance framework and policies are only effective if the entire organization understands and adheres to them. Therefore, by Q2 2025, U.S. companies must implement mandatory and ongoing ethical AI training programs for all employees involved in AI development, deployment, or decision-making. This training should not be limited to technical teams but extend to legal, marketing, and executive leadership.

The curriculum should cover fundamental AI ethics principles, potential biases, regulatory requirements (including state-specific laws and federal guidelines), and the company’s internal policies. Practical case studies and scenario-based learning can help employees recognize and address ethical dilemmas in their daily work. This investment in human capital is critical for fostering a culture of responsible AI innovation.

Components of effective AI ethics training

- Foundational principles of fairness, accountability, and transparency.

- Practical tools for bias detection and mitigation in AI models.

- Legal and regulatory landscape relevant to AI in the U.S.

Educating the workforce ensures that every individual contributing to AI initiatives understands their role in upholding ethical standards. This collective understanding is vital for preventing issues before they arise and for responding effectively when challenges emerge. Consistent training fosters a shared commitment to responsible digital development.

Step 4: Implement Robust AI Audit Mechanisms and Impact Assessments

To ensure that AI systems operate ethically and align with company policies, U.S. companies must establish robust audit mechanisms and conduct regular AI impact assessments by Q2 2025. These audits should evaluate AI models for bias, accuracy, security vulnerabilities, and adherence to privacy regulations. This includes both internal audits and, where appropriate, independent third-party assessments.

AI impact assessments should proactively identify and mitigate potential risks before deployment. This involves evaluating the broader societal and individual impacts of an AI system, considering factors such as job displacement, discrimination, and psychological effects. These assessments are crucial for identifying unforeseen consequences and ensuring that AI initiatives contribute positively to society.

Key aspects of AI auditing and impact assessments

- Bias Detection Tools: Utilize specialized software to identify and quantify algorithmic bias.

- Performance Monitoring: Continuously monitor AI models for drift in performance or fairness metrics.

- Stakeholder Consultation: Engage with affected communities during impact assessments to gather diverse perspectives.
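For the Performance Monitoring item, one common drift check is the Population Stability Index (PSI). It compares the binned distribution of a model input or score at training time with the distribution seen in production: PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%). The sketch below assumes the data has already been binned into counts. The 0.1 and 0.25 cut-offs are a widely used rule of thumb, not a regulatory standard, and the function names are hypothetical.

```python
import math
from typing import Sequence


def population_stability_index(expected: Sequence[int], actual: Sequence[int], eps: float = 1e-6) -> float:
    """PSI between two binned distributions given as per-bin counts."""
    if len(expected) != len(actual) or not expected:
        raise ValueError("expected and actual must be non-empty with the same number of bins")
    exp_total, act_total = sum(expected), sum(actual)
    if exp_total == 0 or act_total == 0:
        raise ValueError("each distribution needs at least one observation")
    psi = 0.0
    for e, a in zip(expected, actual):
        # Floor empty bins at eps so the logarithm stays finite.
        e_pct = max(e / exp_total, eps)
        a_pct = max(a / act_total, eps)
        psi += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return psi


def drift_level(psi: float) -> str:
    """Rule-of-thumb bands; the thresholds are a convention to tune per model."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate drift"
    return "significant drift"


training_bins = [25, 25, 25, 25]    # hypothetical score distribution at training time
production_bins = [10, 20, 30, 40]  # same bins, observed in production
score = population_stability_index(training_bins, production_bins)
print(round(score, 3), drift_level(score))  # 0.228 moderate drift
```

The same check can be run per demographic group, so that a shift in a fairness metric is caught alongside a shift in overall performance.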

Regular auditing provides an objective measure of an AI system’s ethical performance and helps maintain accountability. By systematically evaluating AI’s effects, companies can refine their models and practices, ensuring continuous improvement in their ethical posture. This iterative process is essential for adapting to new ethical challenges and technological advancements.

Step 5: Prioritize Data Privacy and Security in All AI Operations

Data is the lifeblood of AI, and its ethical handling is non-negotiable. By Q2 2025, U.S. companies must elevate data privacy and security to a top priority in all AI operations. This involves implementing stringent data governance practices, robust cybersecurity measures, and adherence to data protection laws such as the California Consumer Privacy Act (CCPA) and HIPAA, while tracking proposed federal privacy legislation.

Companies must ensure that personal data collected for AI training or operation is handled with the highest level of care, respecting user consent and minimizing data collection to what is strictly necessary. Furthermore, robust security protocols are essential to protect AI systems and the data they process from breaches, manipulation, and unauthorized access. This commitment to data ethics underpins all other ethical AI efforts.
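A minimal sketch of consent filtering and data minimization applied before records reach an AI training pipeline. The allowlist, the field names, and the `consented` flag are hypothetical; in practice the allowed fields would follow from the model's documented purpose, and consent status would come from the company's own consent records.

```python
from typing import Any, Dict, Iterable, List, Set

# Hypothetical allowlist: the only fields this illustrative model is documented to need.
ALLOWED_FIELDS: Set[str] = {"age_band", "region", "tenure_months"}


def minimize_records(
    records: Iterable[Dict[str, Any]],
    allowed: Set[str] = ALLOWED_FIELDS,
    consent_key: str = "consented",
) -> List[Dict[str, Any]]:
    """Keep only consenting users' records, and only the allowlisted fields."""
    kept = []
    for record in records:
        # Records without an explicit True consent flag are excluded entirely.
        if record.get(consent_key) is not True:
            continue
        # Drop everything not on the allowlist, including direct identifiers.
        kept.append({key: value for key, value in record.items() if key in allowed})
    return kept


raw = [
    {"name": "Ann", "email": "ann@example.com", "age_band": "30-39",
     "region": "West", "tenure_months": 12, "consented": True},
    {"name": "Bob", "age_band": "40-49", "region": "East",
     "tenure_months": 3, "consented": False},
]
print(minimize_records(raw))
# [{'age_band': '30-39', 'region': 'West', 'tenure_months': 12}]
```

An allowlist is used rather than a blocklist so that new fields added upstream are excluded by default until someone justifies collecting them.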

Essential data privacy and security measures

- Privacy-Enhancing Technologies: Implement techniques like differential privacy and federated learning.

- Regular Security Audits: Conduct frequent penetration testing and vulnerability assessments on AI infrastructure.

- Data Minimization: Collect only the data essential for AI model functionality, reducing exposure risks.
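Differential privacy, one of the privacy-enhancing technologies listed above, can be illustrated with the Laplace mechanism on a counting query. Adding or removing one person changes a count by at most 1, so adding Laplace noise with scale 1/epsilon gives epsilon-differential privacy for that single query. This is an illustration only: naive floating-point Laplace sampling has known weaknesses, so production systems should rely on a vetted differential-privacy library. `dp_count` is a hypothetical name.

```python
import random
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def dp_count(
    values: Iterable[T],
    predicate: Callable[[T], bool],
    epsilon: float,
    rng: Optional[random.Random] = None,
) -> float:
    """Epsilon-DP estimate of how many values satisfy predicate (Laplace mechanism)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for v in values if predicate(v))
    # A count has sensitivity 1, so the noise scale is 1 / epsilon.
    # The difference of two independent Exp(rate=epsilon) draws is Laplace(0, 1/epsilon).
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise


ages = [23, 35, 41, 29, 52, 38, 61, 27]  # hypothetical data
print(dp_count(ages, lambda age: age >= 40, epsilon=0.5, rng=random.Random(42)))
```

Smaller values of `epsilon` add more noise and give stronger privacy, and each additional query on the same data consumes more of the overall privacy budget.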

A strong focus on data privacy and security not only mitigates legal and reputational risks but also builds fundamental trust with users. When individuals feel their data is protected and used responsibly, they are more likely to engage with AI-powered services. This foundational element is crucial for the widespread adoption and acceptance of AI technologies.

Navigating the Evolving Landscape of AI Regulation

The regulatory landscape for AI in the U.S. is rapidly evolving, with various states and federal agencies proposing and enacting new guidelines. Companies that implement these five steps will be better prepared to meet existing compliance requirements and adapt to future regulations. Proactive engagement with AI ethics ensures that businesses remain ahead of the curve, minimizing potential penalties and fostering a reputation as responsible innovators.

Federal initiatives, such as the White House's non-binding Blueprint for an AI Bill of Rights and ongoing discussions in Congress, signal a growing commitment to regulating AI. Companies that have already established ethical frameworks, transparent policies, and robust auditing processes will find it easier to integrate new mandates. This forward-thinking approach transforms potential compliance burdens into strategic advantages.

Key regulatory considerations

- State-level privacy laws: Understanding and complying with varied state regulations like CCPA and upcoming privacy acts.

- Sector-specific guidelines: Adhering to AI-related guidance from agencies like the FDA, FTC, and EEOC.

- International standards: Monitoring global AI ethics frameworks for potential influence on U.S. policy.

Staying informed and agile in response to regulatory changes is critical. Companies should view compliance not as a static goal but as an ongoing process of adaptation and continuous improvement. The five steps outlined provide a solid framework for navigating this complex and dynamic environment, ensuring long-term responsible digital development.

| Key Step | Brief Description |
|---|---|
| AI Ethics Committee | Establish a diverse committee and governance framework for oversight. |
| Transparent Policies | Develop and communicate clear policies on data, bias, and human oversight. |
| Ethical Training | Implement mandatory, ongoing AI ethics training for all relevant employees. |
| Audits & Assessments | Conduct regular AI audits and impact assessments for bias, accuracy, and societal effects. |
| Data Privacy & Security | Apply data minimization, privacy-enhancing technologies, and regular security audits in all AI operations. |

Frequently Asked Questions About AI Ethics for U.S. Companies

Why is AI ethics so urgent for U.S. companies now?

The urgency stems from rapid AI adoption, increased regulatory scrutiny, and growing public awareness of issues like algorithmic bias and data privacy. Proactive ethical measures mitigate risks and build trust, which is essential for sustained growth.

What are the risks of neglecting AI ethics?

Neglecting AI ethics can lead to significant risks, including reputational damage, legal liabilities from regulatory non-compliance, financial penalties, loss of customer trust, and the development of biased or harmful AI systems that undermine business goals.

How does an AI ethics committee help?

An AI ethics committee provides centralized oversight, establishes ethical guidelines, and ensures accountability throughout the AI lifecycle. It facilitates interdisciplinary collaboration, helping to identify and address ethical dilemmas before they escalate.

Why does transparency matter in AI policies?

Transparency builds trust by allowing stakeholders to understand how AI systems function, how data is used, and how decisions are made. Clear, accessible policies help address concerns about fairness and accountability, fostering greater acceptance of AI technologies.

Do these steps apply to small and medium-sized businesses?

Yes. The steps matter for small and medium-sized businesses (SMBs) too and can be scaled to their operations. While the scope may differ from that of larger enterprises, the principles of ethical AI, data privacy, and responsible development apply to every business that uses AI.

What happens next

The imperative for U.S. companies to embed AI ethics into their core operations by Q2 2025 is clear and immediate. Businesses failing to adopt these five foundational steps risk falling behind in both regulatory compliance and public trust. The coming months will likely see increased enforcement and greater consumer demand for ethical AI, making proactive implementation a critical strategic advantage for long-term success in the digital economy.